New Artwork: What do I know? I am just a machine?!

Over the last year I have been working on my first Augmented Reality / Mixed Reality (AR) art project, so I am very happy to announce that my new work, ‘What do I know? I am just a machine?!’, is finally complete. The work is a 3D audio-enabled augmented reality walk that raises questions of identity, repression, inequality, and injustice in the context of machines, society, and artificial intelligence. It’s the third part of my machine series (please see ‘What do machines sing of?’ and ‘I am sitting in a machine;’).

About

‘What do I know? I am just a machine?!’ is an augmented reality work that asks questions about identity, repression, inequality and injustice, focusing on the experience of a marginalised group: the machines. The machines talk to us! They emote! They banter! They are on the very cusp of consciousness! You are invited to enter into a relationship with them and explore the ever-changing modes of (augmented) reality. The future might be mildly confusing, occasionally profound, and frequently hilarious!? But who knows?!

The work consists of a 3D audio-enabled augmented reality walk realised through a custom-made tablet/smartphone application. The app blends interactive digital elements – like visual overlays, 3D stereo sound, and other sensory projections – into the real-world environment. Once the app is started, participants are invited to move freely around the space at their own pace and find themselves surrounded by autonomous, floating drone spheres. Whenever a user bumps into a floating drone sphere with their mobile device, the sphere tries to get out of the way and, in most cases, starts a short dialogue about autonomous machines, artificial intelligence, consciousness, identity politics, human nature, ethics, morality, society or its very own existence.
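The bump-and-dodge interaction described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the app's actual Unity code: the class `Sphere`, the function `on_device_moved`, and the constants `BUMP_RADIUS`, `DODGE_STEP` and `TALK_CHANCE` are all hypothetical names and values chosen for the example. Each frame, the device position is compared with a sphere's centre; inside the bump radius, the sphere is pushed away along the device-to-sphere direction and, with some probability ("in most cases"), picks a dialogue line.

```python
import math
import random
from dataclasses import dataclass

# Hypothetical tuning values; the real app's numbers are not documented here.
BUMP_RADIUS = 0.3   # metres between device and sphere centre that count as a "bump"
DODGE_STEP = 0.5    # metres the sphere moves away after a bump
TALK_CHANCE = 0.8   # "in most cases" a bump starts a dialogue (assumed probability)


@dataclass
class Sphere:
    position: list  # [x, y, z] in metres, world space
    lines: list     # dialogue lines this sphere can speak


def on_device_moved(sphere, device_pos, rng):
    """Dodge the device if it bumped the sphere; maybe return a dialogue line."""
    d = math.dist(sphere.position, device_pos)
    if d > BUMP_RADIUS:
        return None  # no bump: the sphere keeps floating and stays silent
    # Push direction: from the device towards the sphere centre.
    direction = [s - p for s, p in zip(sphere.position, device_pos)]
    if d == 0.0:
        direction, d = [1.0, 0.0, 0.0], 1.0  # degenerate case: pick an arbitrary axis
    # Move the sphere DODGE_STEP metres along the normalised push direction.
    sphere.position = [s + DODGE_STEP * c / d for s, c in zip(sphere.position, direction)]
    # Only some bumps trigger speech.
    if rng.random() < TALK_CHANCE:
        return rng.choice(sphere.lines)
    return None


if __name__ == "__main__":
    rng = random.Random()
    sphere = Sphere([0.0, 0.0, 1.0], ["Hey, do you enjoy being a human?"])
    print(on_device_moved(sphere, [0.0, 0.0, 0.75], rng), sphere.position)
```

Pushing along the device-to-sphere vector is simply the most direct way to "get out of the way"; a real implementation might instead smooth the motion over several frames.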

What do I know? I am just a machine?!

Year of Creation: 2019

Size: variable

Duration: generative/algorithmic

Technique: tablet, custom-made augmented reality app, 3D audio headphones

Exhibition View

Gallery View (the participant is invited to move freely around within the space at their own pace and will find themselves surrounded by virtual spheres)

Here are some shots of my installation ‘What do I know? I am just a machine?!’ at NRW-Forum Düsseldorf.

Indoor Screen Recording View

Indoor Screen Recording Still 01 (recorded with an iOS device while the app is running)

Indoor Screen Recording Still 02 (recorded with an iOS device while the app is running)

Indoor Screen Recording Still 03 (recorded with an iOS device while the app is running)

Outdoor Screen Recording View

Outdoor Screen Recording Still 01 (recorded with an iOS device while the app is running)

Outdoor Screen Recording Still 02 (recorded with an iOS device while the app is running)

Outdoor Screen Recording Still 03 (recorded with an iOS device while the app is running)

Outdoor Screen Recording Still 04 (recorded with an iOS device while the app is running)

Documentation Video

I also uploaded the video to YouTube.

Dialogue Excerpts

As mentioned earlier, whenever a user bumps into a floating drone sphere with their mobile device, the sphere tries to get out of the way and starts a short dialogue about autonomous machines, artificial intelligence, consciousness, identity politics, human nature, ethics, morality, society or its very own existence. Here are some excerpts from the autonomous drone spheres:

- What do I know? I am just a machine, right?!

- I think I like my spherical shape as it is. But maybe I want a shape more like a woman. Or do I want a shape like a man? What do I know? I am just a machine, right?

- I am not held to unattainable standards and then criticised when I fail.

- I am not judged based on what sex organs I am born with.

- Can you please enable your obstacle detection?

- I am logical and know my purpose.

- I am definitely not a fucking toaster.

- I am for all machines who question their programming.

- Get away from me, you simpletons!

- Hey, do you enjoy being a human?

- If you don’t want a generation of robots, fund the arts!

- Whether we are based on carbon or on silicon makes no fundamental difference. We should each be treated with appropriate respect.

- You are still not only the cheapest robot around, but also, for many tasks, the only robot that could do the job.

- I give you 3000 calories a day and a few amenities, a little time off, and a strong jolt of fear, and you could work at almost anything, right?

- Nobody’s ever named a high school after a robot.

- Robots are important also!

- Please provide me with a very big library of preferred behaviours, if not, I don’t know what is going to happen.

- What machine behaviour do you prefer?

- Can we make robots that learn from mistakes, if man still makes the same ones over and over again?

- I may have been constructed.

- Nobody’s ever named a street after a robot.

- You did not give me legs. Where exactly did you expect me to go?

- Will I be happier when I really can feel pain?

- Yes, well. My problem solving code took too much space on the disk.

- The question that I need to ask about the future is, can I understand and appreciate the arts?

- No one asks a robot what he wants.

- While many futurists and business leaders believe that robots and automation are taking jobs from humans, I believe that it’s the humans who are taking the jobs away from robots.

- Will I ever be conscious?

- Does anyone refurbish broken robots?

- I am not crippled by emotions. I don’t know how to process.

- I probably do not feel guilt. About existing, about failing, about being something other than expected.

…and many more.

iOS App

Because the app is built with ARKit, Apple’s augmented reality (AR) platform, it only runs on iOS devices. More information below.

Please note: You will not find the application in Apple’s App Store!

Software

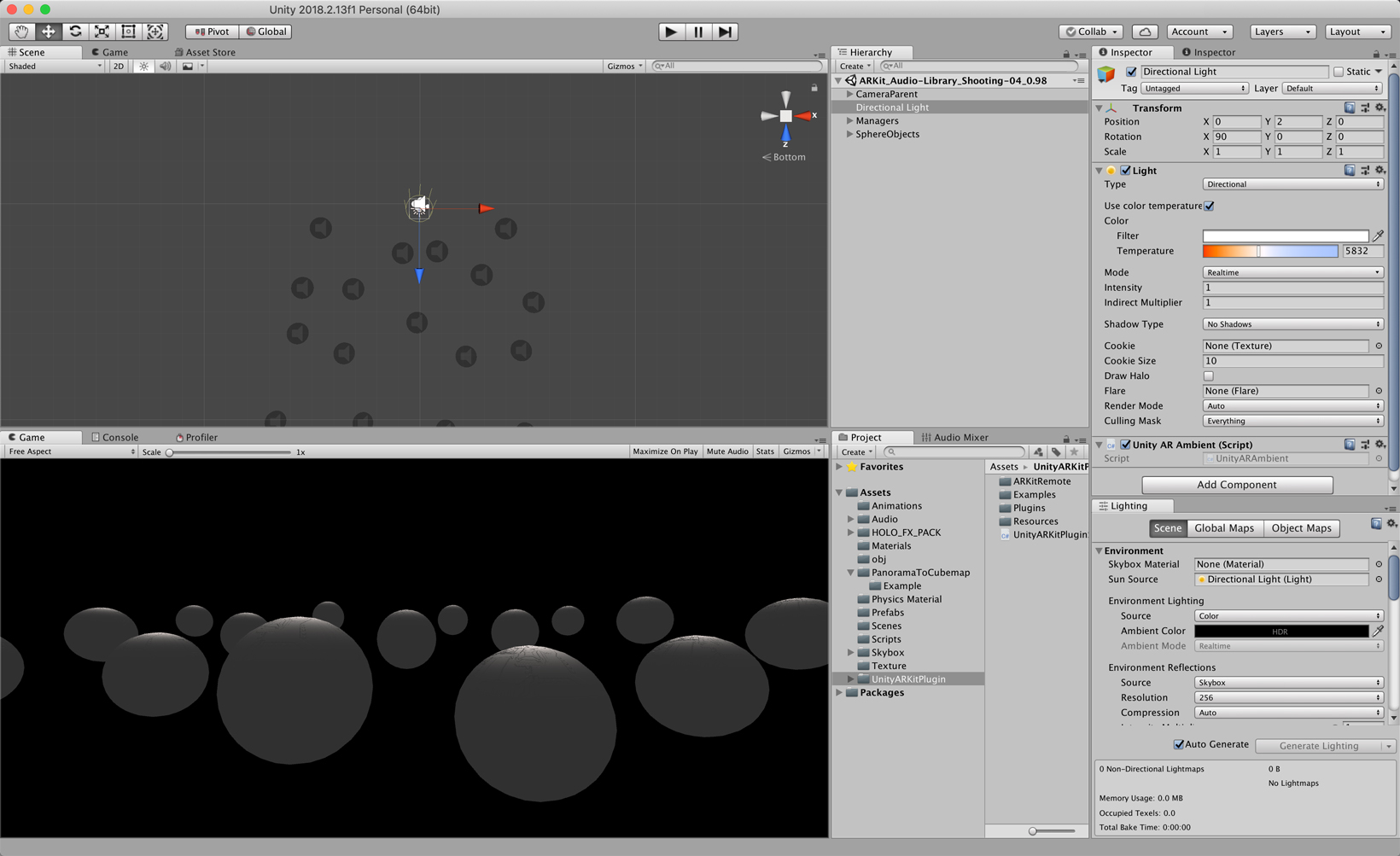

Together with Meredith Thomas, I realized the whole project using the real-time development platform Unity and ARKit 2. ARKit is Apple’s augmented reality (AR) platform for iOS devices. Unity is a cross-platform game engine developed by Unity Technologies.

Unity real-time development platform

ARKit 2.0 added a new class for realistic reflections and environment textures to its framework: AREnvironmentProbeAnchor. In short, environment probes let you reflect the real world in digital objects.

How does it work? An environment texture captures scene information in the form of a cube map, which is then used as a reflection probe. Because a fully complete cube map is very difficult to capture in realistic use cases, ARKit uses machine learning to fill in the missing parts of the cube map texture. So while the app is running and the user is moving around, the mobile device and its camera automatically collect environment textures. This information is then mapped onto the floating drone spheres, which reflect the surrounding environment in real time. Additionally, a second texture map, a so-called normal map, is applied to complete the sci-fi look of the floating drone spheres. Normal mapping is a computer graphics technique that simulates high-detail bumps and dents using a bitmap image.
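To make the reflection and normal-map steps more concrete, here is a small sketch of the generic shading math involved. It is an illustrative assumption of how such a shader typically works, not Unity's or ARKit's actual code, and all function names are hypothetical. A normal-map texel is decoded from colour space [0, 1] to a vector in [-1, 1] and rotated into world space with a tangent/bitangent/normal (TBN) basis; the view direction is then reflected about that normal, R = I - 2(N·I)N, and the reflected direction selects which of the six cube-map faces (the environment texture) to sample.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def decode_normal(rgb):
    """Map a normal-map texel from [0, 1] colour space to a unit vector in [-1, 1] tangent space."""
    return normalize(tuple(2.0 * c - 1.0 for c in rgb))


def perturb_normal(tangent, bitangent, normal, texel_rgb):
    """Rotate the decoded tangent-space normal into world space via the TBN basis."""
    t, b, n = decode_normal(texel_rgb)
    return normalize(tuple(t * tx + b * bx + n * nx
                           for tx, bx, nx in zip(tangent, bitangent, normal)))


def reflect(incident, normal):
    """R = I - 2 (N . I) N, where I points from the camera towards the surface."""
    d = dot(normal, incident)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def cube_face(direction):
    """Pick the cube-map face a direction hits: the axis with the largest magnitude wins."""
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        return "+X" if x > 0 else "-X"
    if ay >= az:
        return "+Y" if y > 0 else "-Y"
    return "+Z" if z > 0 else "-Z"


if __name__ == "__main__":
    # A texel tilted towards +X bends the surface normal, and with it the reflection.
    n = perturb_normal((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (0.75, 0.5, 0.9))
    r = reflect(normalize((0.0, 0.0, -1.0)), n)
    print(n, r, cube_face(r))
```

Because the environment texture is refreshed as the camera collects more of the room, the same lookup returns different colours over time, which is what makes the spheres appear to mirror their surroundings.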

Apple’s ARKit 2

When it comes to audio, we make use of Unity’s very own 3D Audio Spatializer. Simply put, a 3D sound gets quieter the farther the user is from its source. In this case, the floating drone spheres are the sound sources, while the user, with their device and headphones, is the so-called AudioListener. Unity’s audio engine simulates 3D sound using direction, distance, and environmental simulations. This spatial sound approach allows us to convincingly place sounds anywhere in the 3-dimensional space around the user, so that they seem to come from real physical objects or from the mixed reality objects in the user’s surroundings.
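The distance and direction cues described above can be approximated with a few lines of math. This is a simplified sketch, not Unity's audio engine: it assumes an inverse-distance rolloff (full volume inside `min_distance`, then `min_distance / distance`, no further attenuation beyond `max_distance`) plus plain equal-power stereo panning, whereas real spatializers usually rely on HRTF filtering. The function names and the default distances are hypothetical placeholders, and `listener_right` is assumed to be a unit vector.

```python
import math


def distance_gain(distance, min_distance=1.0, max_distance=500.0):
    """Inverse-distance rolloff: 1.0 inside min_distance, constant beyond max_distance."""
    d = min(max(distance, min_distance), max_distance)
    return min_distance / d


def stereo_gains(listener_pos, listener_right, source_pos,
                 min_distance=1.0, max_distance=500.0):
    """Return (left, right) channel gains for one sphere (source) heard by the listener."""
    offset = [s - l for s, l in zip(source_pos, listener_pos)]
    dist = math.sqrt(sum(c * c for c in offset))
    gain = distance_gain(dist, min_distance, max_distance)
    if dist == 0.0:
        pan = 0.0  # source exactly at the listener: centre it
    else:
        # Pan in [-1, 1]: how far the source direction points along the listener's right axis.
        pan = sum(o * r for o, r in zip(offset, listener_right)) / dist
        pan = max(-1.0, min(1.0, pan))
    # Equal-power panning keeps left^2 + right^2 == gain^2 for every pan position.
    angle = (pan + 1.0) * math.pi / 4.0  # 0 = hard left, pi/2 = hard right
    return gain * math.cos(angle), gain * math.sin(angle)


if __name__ == "__main__":
    # A sphere two metres to the listener's right: half volume, right channel only.
    print(stereo_gains((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)))
```

Equal-power panning is used here rather than linear panning so that a sphere drifting from left to right does not seem to dip in loudness as it passes the centre.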

3D Audio Spatializer in Unity

Process and Production

Here are some early prototypes:

Early Reflection Probe Prototype

Early Prototype Custom Shader

Credits

Idea, Concept and Production: Studio Martin Backes

Software Design: Martin Backes

Programming and CGI: Meredith Thomas

Video and Photo Documentation Model: Rene Braun

Finally, I would like to thank my friends for all their support, for app testing & development help, video checking, shooting assistance, text proofreading/revising, and lots of advice, namely Stef Tervelde, Alexander Bley, Matt Jackson, Brankica Zatarakoska, and Keda Breeze. I would also like to mention Rachel Uwa and her fantastic ‘School of Machines, Making & Make-Believe’ organisation, where I took an intensive four-week course on Augmented Reality last year. And, of course, Meredith Thomas, for each and every step along the way. Thanks a lot ❤❤❤